PlayDiffusion - Next-Generation AI Voice Inpainting Technology

Transform your audio editing experience with PlayDiffusion's advanced diffusion-based approach. Edit speech naturally, maintain context, and achieve seamless transitions with our cutting-edge AI technology.

Try PlayDiffusion Live Demo

Experience the power of PlayDiffusion directly in your browser. Our interactive demo allows you to test voice inpainting and speech editing capabilities in real-time.

Interactive PlayDiffusion Demo

Why Choose PlayDiffusion

Advanced Diffusion Technology

Leverage our novel diffusion-based approach for natural speech editing. PlayDiffusion maintains context and speaker characteristics while enabling precise audio modifications.

Seamless Audio Inpainting

Edit portions of generated audio without discontinuity artifacts. PlayDiffusion ensures smooth transitions and consistent voice characteristics across edited segments.

Efficient Non-Autoregressive Generation

Experience up to 50x faster generation compared to traditional models. PlayDiffusion's non-autoregressive approach produces high-quality audio in fewer steps.

Context-Aware Editing

Preserve surrounding context while modifying specific segments. PlayDiffusion's advanced architecture ensures natural-sounding results with perfect transitions.

Speaker Consistency

Maintain consistent speaker characteristics across edits. PlayDiffusion's speaker conditioning ensures voice identity remains stable throughout modifications.

Open Source Availability

Access PlayDiffusion's source code and model weights on Hugging Face. Join our community of developers and researchers advancing voice AI technology.

What Experts Say About PlayDiffusion

PlayDiffusion represents a significant advancement in voice AI technology. Its diffusion-based approach solves long-standing challenges in audio inpainting.

Dr. Alex Chen

AI Researcher

The seamless transitions and context preservation in PlayDiffusion are remarkable. It's revolutionizing how we approach voice editing.

Sarah Martinez

Audio Engineer

PlayDiffusion's non-autoregressive architecture offers impressive efficiency gains without compromising quality. A game-changer for voice synthesis.

Prof. James Wilson

Computer Science

The open-source nature of PlayDiffusion makes it accessible to researchers and developers worldwide. Excellent contribution to the AI community.

Emily Zhang

AI Developer

PlayDiffusion's speaker conditioning ensures remarkable consistency in voice characteristics. A crucial advancement for voice AI applications.

Dr. Michael Brown

Speech Technology

The performance improvements in PlayDiffusion are substantial. It's setting new standards for efficiency in voice synthesis and editing.

Lisa Thompson

Tech Lead

Frequently Asked Questions

PlayDiffusion is an advanced AI voice model that uses diffusion-based technology for natural speech editing and inpainting. It enables precise modifications of audio segments while maintaining context and speaker characteristics.

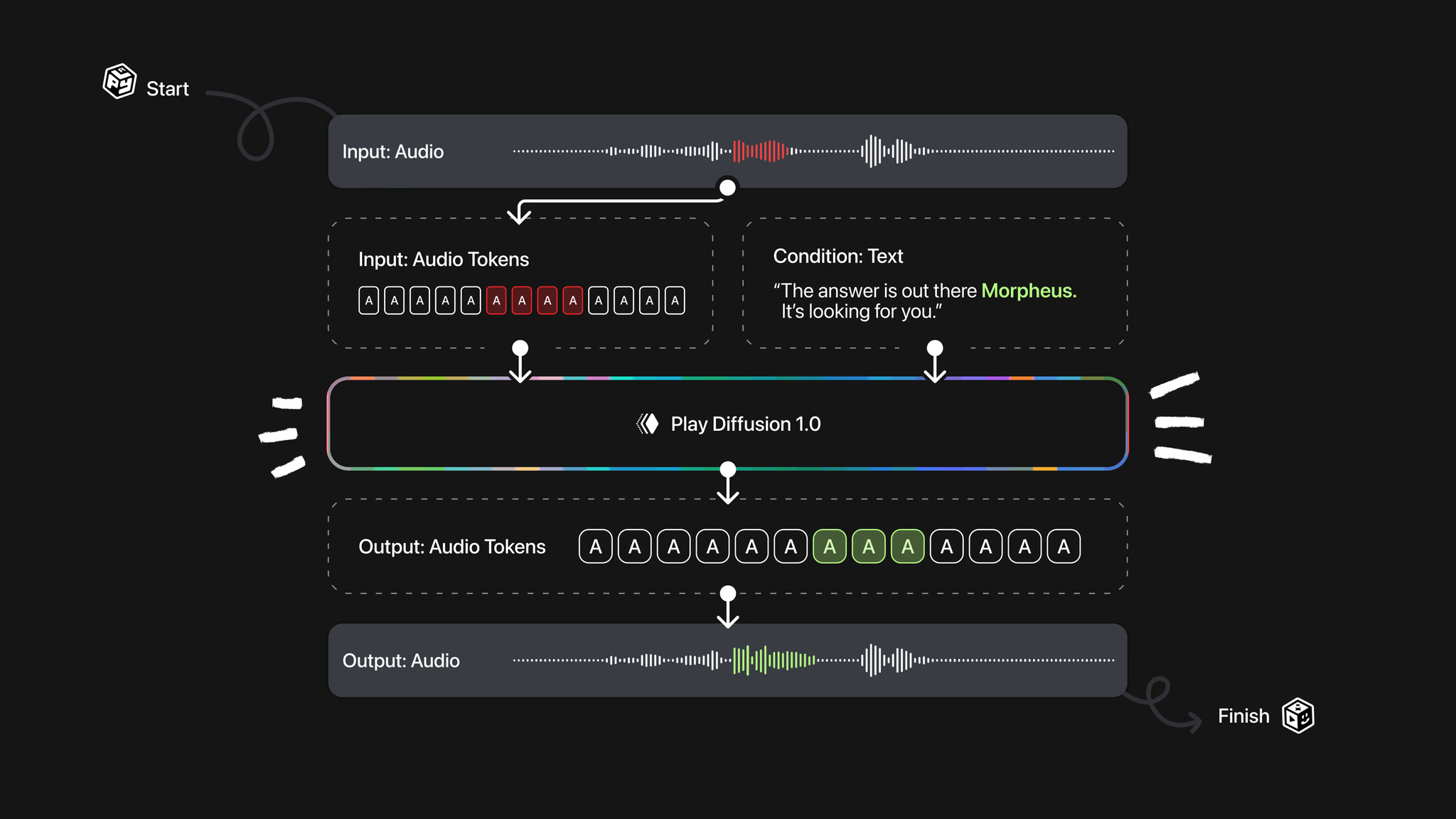

PlayDiffusion uses a novel diffusion-based approach that encodes audio into discrete tokens, masks the target segment, and employs a diffusion model to denoise the masked region while preserving surrounding context. The result is then transformed back to speech using our BigVGAN decoder.

PlayDiffusion's key differentiators include its non-autoregressive architecture (up to 50x faster generation), seamless context preservation, and advanced speaker conditioning. Unlike traditional models, it can edit portions of audio without discontinuity artifacts.

You can experience PlayDiffusion through Play Studio or access the source code and model weights on Hugging Face. The model is open-source and available for researchers and developers.

PlayDiffusion excels in voice editing, speech inpainting, and text-to-speech applications. It's particularly effective for modifying specific segments of audio while maintaining natural transitions and speaker consistency.

Yes, PlayDiffusion's efficient non-autoregressive architecture makes it suitable for real-time applications. Its optimized token generation process significantly reduces computational requirements while maintaining high quality.